# GetDist Documentation

---

## https://getdist.readthedocs.io/en/latest/index.html

# GetDist

GetDist is a Python package for analysing and plotting Monte Carlo (or other) samples.

* [Introduction](intro.md)

* [Plot gallery and tutorial](https://getdist.readthedocs.io/en/latest/plot_gallery.html)

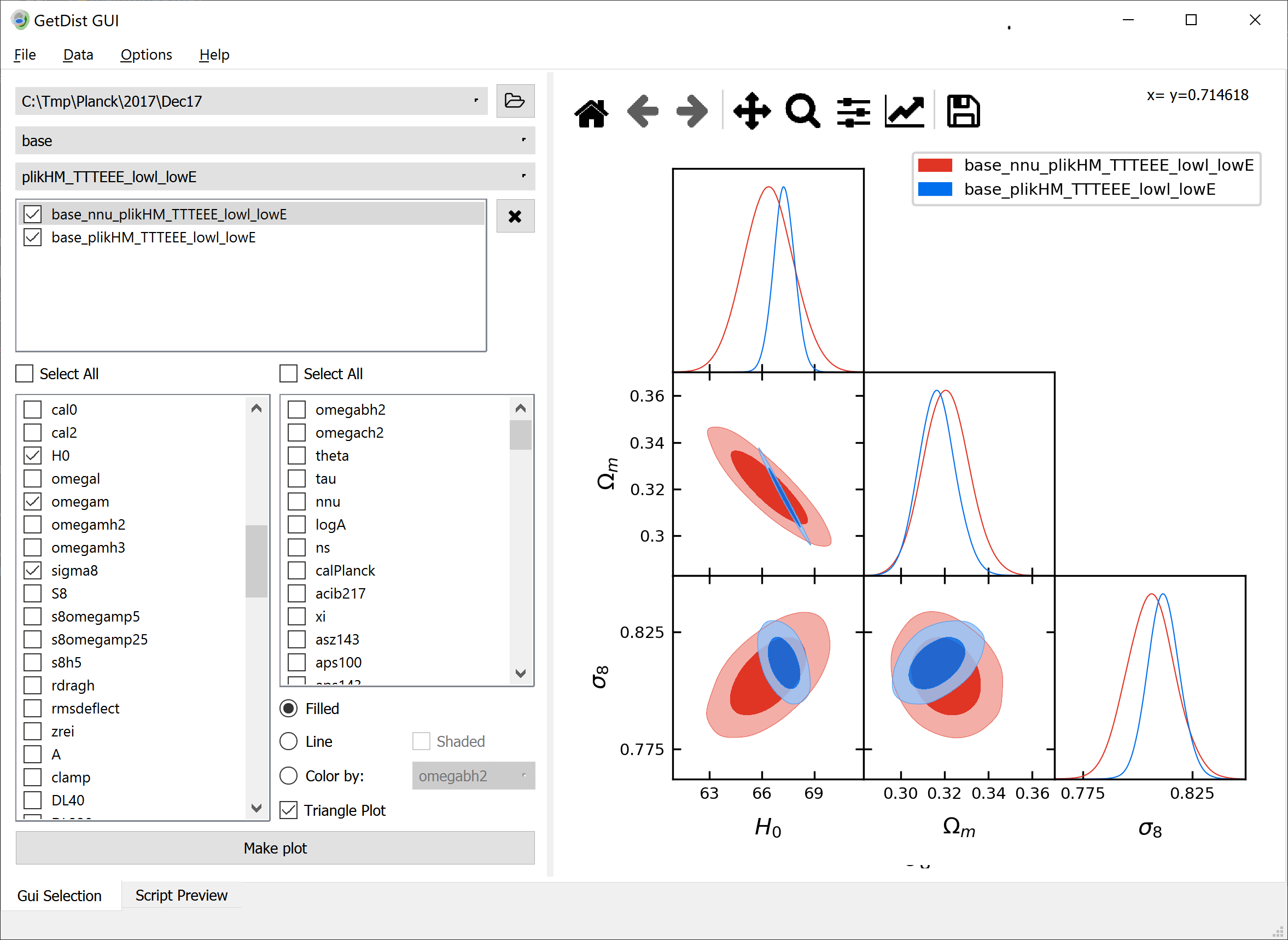

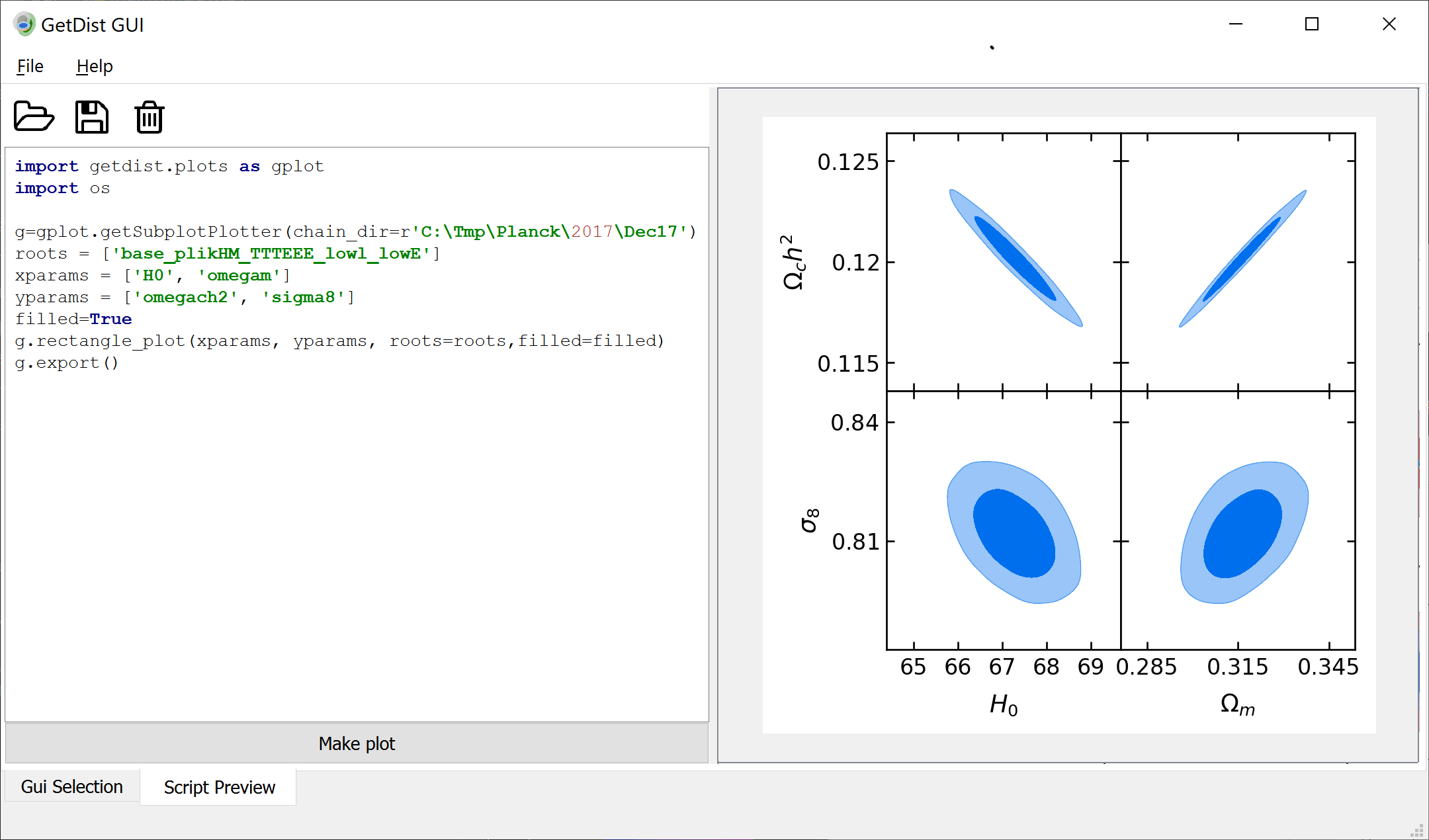

* [GetDist GUI program](gui.md)

* [Paper with technical details](https://arxiv.org/abs/1910.13970)

**LLM Integration**: For AI assistants and LLM agents, a comprehensive [LLM context document](https://getdist.readthedocs.io/en/latest/_static/getdist_docs_combined.md) can be used.

There’s also an [AI help assistant](https://cosmocoffee.info/help_assist.php) you can use to ask about the package.

High-level modules for analysing samples and plotting:

* [getdist.mcsamples](mcsamples.md)

* [`loadMCSamples()`](mcsamples.md#getdist.mcsamples.loadMCSamples)

* [`MCSamples`](mcsamples.md#getdist.mcsamples.MCSamples)

* [getdist.plots](plots.md)

* [getdist.plots.get_single_plotter](_summaries/getdist.plots.get_single_plotter.md)

* [getdist.plots.get_subplot_plotter](_summaries/getdist.plots.get_subplot_plotter.md)

* [getdist.plots.GetDistPlotter](_summaries/getdist.plots.GetDistPlotter.md)

* [getdist.plots.GetDistPlotSettings](_summaries/getdist.plots.GetDistPlotSettings.md)

* [`GetDistPlotError`](plots.md#getdist.plots.GetDistPlotError)

* [`GetDistPlotSettings`](plots.md#getdist.plots.GetDistPlotSettings)

* [`GetDistPlotter`](plots.md#getdist.plots.GetDistPlotter)

* [`MCSampleAnalysis`](plots.md#getdist.plots.MCSampleAnalysis)

* [`add_plotter_style()`](plots.md#getdist.plots.add_plotter_style)

* [`get_plotter()`](plots.md#getdist.plots.get_plotter)

* [`get_single_plotter()`](plots.md#getdist.plots.get_single_plotter)

* [`get_subplot_plotter()`](plots.md#getdist.plots.get_subplot_plotter)

* [`set_active_style()`](plots.md#getdist.plots.set_active_style)

See also:

* [Analysis settings](analysis_settings.md)

* [Using GetDist with MCMC sampler outputs](arviz_integration.md)

* [ArviZ Integration](arviz_integration.md#arviz-integration)

* [Basic Usage](arviz_integration.md#basic-usage)

* [PyMC Integration](arviz_integration.md#pymc-integration)

* [Example: Eight Schools Model](arviz_integration.md#example-eight-schools-model)

* [emcee Integration](arviz_integration.md#emcee-integration)

* [ArviZ Options](arviz_integration.md#arviz-options)

* [Custom Parameter Ranges](arviz_integration.md#custom-parameter-ranges)

* [Including Weights and Likelihoods](arviz_integration.md#including-weights-and-likelihoods)

* [Multi-dimensional Parameters](arviz_integration.md#multi-dimensional-parameters)

* [Burn in](arviz_integration.md#burn-in)

Other main modules:

* [getdist.chains](chains.md)

* [getdist.covmat](covmat.md)

* [getdist.densities](densities.md)

* [getdist.gaussian_mixtures](gaussian_mixtures.md)

* [getdist.inifile](inifile.md)

* [getdist.paramnames](paramnames.md)

* [getdist.parampriors](parampriors.md)

* [getdist.types](types.md)

* [Index](genindex.md)

---


## https://getdist.readthedocs.io/en/latest/mcsamples.html

# getdist.mcsamples

#### NOTE

**Important Convention**: In GetDist, the `loglikes` parameter and related variables represent

**-log(posterior)**, not -log(likelihood). The posterior is the product of likelihood and prior.

This means `loglikes` contains the negative logarithm of the full

posterior probability, including both the likelihood and any prior contributions.
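As a minimal sketch of this convention (not GetDist code; the function name and the numerical values are hypothetical), the quantity stored per sample can be built from a log-likelihood and a log-prior like this:

```python
import math

def neg_log_posterior(log_likelihood: float, log_prior: float) -> float:
    """Return -log(posterior), up to an additive normalization constant."""
    # posterior is proportional to likelihood * prior, so the logs add before negating
    return -(log_likelihood + log_prior)

# Hypothetical sample: unnormalized Gaussian log-likelihood and a flat prior on [0, 10]
log_like = -0.5 * ((2.0 - 1.5) / 0.5) ** 2
log_prior = -math.log(10.0)

loglike_entry = neg_log_posterior(log_like, log_prior)
print(loglike_entry)
```

A value computed this way is what would be passed in a `loglikes` array; passing only the negative log-likelihood would omit the prior contribution.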

### getdist.mcsamples.loadMCSamples(file_root: [str](https://docs.python.org/3/library/stdtypes.html#str), ini: [None](https://docs.python.org/3/library/constants.html#None) | [str](https://docs.python.org/3/library/stdtypes.html#str) | [IniFile](inifile.md#getdist.inifile.IniFile) = None, jobItem=None, no_cache=False, settings: [Mapping](https://docs.python.org/3/library/collections.abc.html#collections.abc.Mapping)[[str](https://docs.python.org/3/library/stdtypes.html#str), [Any](https://docs.python.org/3/library/typing.html#typing.Any)] | [None](https://docs.python.org/3/library/constants.html#None) = None, chain_exclude=None) → [MCSamples](#getdist.mcsamples.MCSamples)

Loads a set of samples from a file or files.

Sample files are plain text (*file_root.txt*) or a set of files (*file_root_1.txt*, *file_root_2.txt*, etc.).

The auxiliary file **file_root.paramnames** gives the parameter names

and (optionally) **file_root.ranges** gives hard prior parameter ranges.

For a description of the various analysis settings and default values see

[analysis_defaults.ini](https://getdist.readthedocs.io/en/latest/analysis_settings.html).

* **Parameters:**

* **file_root** – The root name of the files to read (no extension)

* **ini** – The name of a .ini file with analysis settings to use

* **jobItem** – an optional grid jobItem instance for a CosmoMC grid output

* **no_cache** – If True, loaded samples are not cached in a pickle

* **settings** – dictionary of analysis settings to override defaults

* **chain_exclude** – A list of indexes to exclude, None to include all

* **Returns:**

The [`MCSamples`](#getdist.mcsamples.MCSamples) instance

### *class* getdist.mcsamples.MCSamples(root: [str](https://docs.python.org/3/library/stdtypes.html#str) | [None](https://docs.python.org/3/library/constants.html#None) = None, jobItem=None, ini=None, settings: [Mapping](https://docs.python.org/3/library/collections.abc.html#collections.abc.Mapping)[[str](https://docs.python.org/3/library/stdtypes.html#str), [Any](https://docs.python.org/3/library/typing.html#typing.Any)] | [None](https://docs.python.org/3/library/constants.html#None) = None, ranges=None, samples: [ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray) | [Iterable](https://docs.python.org/3/library/collections.abc.html#collections.abc.Iterable)[[ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray)] | [None](https://docs.python.org/3/library/constants.html#None) = None, weights: [ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray) | [Iterable](https://docs.python.org/3/library/collections.abc.html#collections.abc.Iterable)[[ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray)] | [None](https://docs.python.org/3/library/constants.html#None) = None, loglikes: [ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray) | [Iterable](https://docs.python.org/3/library/collections.abc.html#collections.abc.Iterable)[[ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray)] | [None](https://docs.python.org/3/library/constants.html#None) = None, temperature: [float](https://docs.python.org/3/library/functions.html#float) | [None](https://docs.python.org/3/library/constants.html#None) = None, \*\*kwargs)

The main high-level class for a collection of parameter samples.

Derives from [`chains.Chains`](chains.md#getdist.chains.Chains), adding high-level functions including

Kernel Density estimates, parameter ranges and custom settings.

For a description of the various analysis settings and default values see

[analysis_defaults.ini](https://getdist.readthedocs.io/en/latest/analysis_settings.html).

* **Parameters:**

* **root** – A root file name when loading from file

* **jobItem** – optional jobItem for parameter grid item. Should have jobItem.chainRoot and jobItem.batchPath

* **ini** – a .ini file to use for custom analysis settings

* **settings** – a dictionary of custom analysis settings

* **ranges** – a dictionary giving any additional hard prior bounds for parameters,

e.g. {‘x’:[0, 1], ‘y’:[None,2]}

If a parameter is periodic, use a triplet, e.g. {‘phi’: [0, 2\*np.pi, True]}

* **samples** – if not loading from file, array of parameter values for each sample, passed

to [`setSamples()`](#getdist.mcsamples.MCSamples.setSamples), or list of arrays if more than one chain

* **weights** – array of weights for samples, or list of arrays if more than one chain

* **loglikes** – array of -log(posterior) for samples, or list of arrays if more than one chain.

Note: this is the negative log posterior (likelihood × prior), not just the likelihood.

* **temperature** – temperature of the sample. If not specified will be read from the

root.properties.ini file if it exists and otherwise default to 1.

* **kwargs** –

keyword arguments passed to inherited classes, e.g. to manually make a samples object from

sample arrays in memory:

- **paramNamesFile**: optional name of .paramnames file with parameter names

- **names**: list of names for the parameters, or list of arrays if more than one chain

- **labels**: list of latex labels for the parameters (without $…$)

- **renames**: dictionary of parameter aliases

- **ignore_rows**:

> - if int >=1: The number of rows to skip at the beginning of the file

> - if float <1: The fraction of rows to skip at the beginning of the file

- **label**: a latex label for the samples

- **name_tag**: a name tag for this instance

- **sampler**: string describing the type of samples; if “nested” or “uncorrelated”

the effective number of samples is calculated using uncorrelated approximation. If not specified

will be read from the root.properties.ini file if it exists and otherwise default to “mcmc”.
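The two forms of **ignore_rows** can be illustrated with a small sketch. The helper `rows_to_skip` is hypothetical and is not part of GetDist; in particular, rounding the fractional case to the nearest row is an assumption made here for illustration.

```python
def rows_to_skip(ignore_rows: float, n_rows: int) -> int:
    """Hypothetical helper: number of leading rows dropped from a chain of n_rows rows."""
    if ignore_rows >= 1:
        # Value >= 1: an absolute number of rows
        return min(int(ignore_rows), n_rows)
    # Value < 1: a fraction of the chain length (rounding is an assumption)
    return int(round(ignore_rows * n_rows))

chain = list(range(1000))  # stand-in for 1000 sample rows

kept_abs = chain[rows_to_skip(200, len(chain)):]   # skip the first 200 rows
kept_frac = chain[rows_to_skip(0.3, len(chain)):]  # skip the first 30% of rows
print(len(kept_abs), len(kept_frac))
```

Either form serves as a burn-in cut applied at the start of the chain.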

#### PCA(params, param_map=None, normparam=None, writeDataToFile=False, filename=None, conditional_params=(), n_best_only=None)

Perform principal component analysis (PCA). In other words,

get eigenvectors and eigenvalues for normalized variables

with optional (log modulus) mapping to find power law fits.

* **Parameters:**

* **params** – List of names of the parameters to use

* **param_map** – A transformation to apply to parameter values; A list or string containing

either N (no transformation) or L (for log transform) for each parameter.

By default, uses log if no parameter values cross zero

* **normparam** – optional name of parameter to normalize result (i.e. this parameter will have unit power)

* **writeDataToFile** – True to write the output to file.

* **filename** – The filename to write, by default root_name.PCA.

* **conditional_params** – optional list of parameters to treat as fixed,

i.e. for PCA conditional on fixed values of these parameters

* **n_best_only** – return just the short summary constraint for the tightest n_best_only constraints

* **Returns:**

a string description of the output of the PCA

#### addDerived(paramVec, name, label='', comment='', range=None)

Adds a new derived parameter

* **Parameters:**

* **paramVec** – The vector of parameter values to add. For example a combination of

parameter arrays from MCSamples.getParams()

* **name** – The name for the new parameter

* **label** – optional latex label for the parameter

* **comment** – optional comment describing the parameter

* **range** – if specified, a tuple of min, max values for the new parameter hard prior bounds

(either can be None for one-side bound)

* **Returns:**

The added parameter’s [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) object

#### changeSamples(samples)

Sets the samples without changing weights and loglikes.

* **Parameters:**

**samples** – The samples to set

#### confidence(paramVec, limfrac, upper=False, start=0, end=None, weights=None) → [ndarray](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html#numpy.ndarray)

Calculate sample confidence limits by counting samples in the tails, without using kernel densities.

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **limfrac** – fraction of samples in the tail,

e.g. 0.05 for a 95% one-tail limit, or 0.025 for a 95% two-tail limit

* **upper** – True to get upper limit, False for lower limit

* **start** – Start index for the vector to use

* **end** – The end index, use None to go all the way to the end of the vector.

* **weights** – numpy array of weights for each sample, by default self.weights

* **Returns:**

confidence limit (parameter value when limfrac of samples are further in the tail)
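The tail-counting idea can be sketched in plain Python. This is a simplified illustration under stated assumptions, not GetDist's implementation: the function `tail_limit` is hypothetical, and it returns the sample value at which the accumulated weight first reaches the target, without any interpolation between samples.

```python
def tail_limit(values, weights, limfrac, upper=False):
    """Sample value at which a fraction limfrac of the total weight lies in the tail."""
    # Walk from the chosen tail inwards: ascending for a lower limit, descending for an upper one
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=upper)
    target = limfrac * sum(weights)
    accumulated = 0.0
    for i in order:
        accumulated += weights[i]
        if accumulated >= target:
            return values[i]
    return values[order[-1]]

values = [float(v) for v in range(1, 101)]  # 1.0 .. 100.0
weights = [1.0] * len(values)               # unit weights

lower = tail_limit(values, weights, 0.025)              # lower end of a 95% two-tail interval
upper = tail_limit(values, weights, 0.025, upper=True)  # upper end of the same interval
print(lower, upper)
```

With 100 unit-weight samples and `limfrac=0.025`, the target weight of 2.5 is reached at the third sample from each end.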

#### cool(cool=None)

Cools the samples, i.e. multiplies log likelihoods by cool factor and re-weights accordingly

* **Parameters:**

**cool** – cool factor, optional if the sample has a temperature specified.
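A rough sketch of what cooling means, assuming the stored values are -log(posterior): raising the sampled density to the power `cool` multiplies each stored value by `cool` and changes each weight by the ratio of the new to the old density. The function below is hypothetical and only illustrates that relation; subtracting the smallest value is a choice made here to keep the weights well scaled, and GetDist may normalize differently.

```python
import math

def cool_samples(weights, loglikes, cool):
    """Scale -log(posterior) values by `cool` and re-weight the samples accordingly."""
    ref = min(loglikes)  # reference point so the best sample keeps its weight
    new_weights = [w * math.exp(-(cool - 1.0) * (L - ref))
                   for w, L in zip(weights, loglikes)]
    new_loglikes = [cool * L for L in loglikes]
    return new_weights, new_loglikes

weights = [1.0, 1.0, 1.0]
loglikes = [10.0, 11.0, 12.0]  # -log(posterior) values

new_w, new_L = cool_samples(weights, loglikes, cool=2.0)
print(new_w)  # the best sample keeps weight 1; worse samples are down-weighted
print(new_L)
```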

#### copy(label=None, settings=None) → [MCSamples](#getdist.mcsamples.MCSamples)

Create a copy of this sample object

* **Parameters:**

* **label** – optional label for the new copy

* **settings** – optional modified settings for the new copy

* **Returns:**

copied [`MCSamples`](#getdist.mcsamples.MCSamples) instance

#### corr(pars=None)

Get the correlation matrix

* **Parameters:**

**pars** – If specified, list of parameter vectors or int indices to use

* **Returns:**

The correlation matrix.

#### cov(pars=None, where=None)

Get parameter covariance

* **Parameters:**

* **pars** – if specified, a list of parameter vectors or int indices to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

The covariance matrix
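For orientation, a weighted covariance and the corresponding correlation can be sketched in plain Python. This is an illustration rather than GetDist's code: `weighted_cov` is a hypothetical helper, normalizing by the total weight is an assumption, and the list comprehension stands in for a `where` filter.

```python
import math

def weighted_cov(x, y, weights):
    """Weighted covariance of x and y, normalized by the total weight."""
    norm = sum(weights)
    mean_x = sum(w * a for w, a in zip(weights, x)) / norm
    mean_y = sum(w * b for w, b in zip(weights, y)) / norm
    return sum(w * (a - mean_x) * (b - mean_y)
               for w, a, b in zip(weights, x, y)) / norm

x = [1.0, 2.0, 3.0, 10.0]
y = [2.0, 4.0, 6.0, 0.0]
w = [1.0, 2.0, 1.0, 1.0]

# Analogue of a `where` filter: keep only samples with x < 5
keep = [a < 5 for a in x]
xs = [a for a, k in zip(x, keep) if k]
ys = [b for b, k in zip(y, keep) if k]
ws = [c for c, k in zip(w, keep) if k]

cov_xy = weighted_cov(xs, ys, ws)
corr_xy = cov_xy / math.sqrt(weighted_cov(xs, xs, ws) * weighted_cov(ys, ys, ws))
print(cov_xy, corr_xy)
```

The filtered samples lie exactly on the line y = 2x, so the correlation coefficient is 1.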

#### deleteFixedParams()

Delete parameters that are fixed (the same value in all samples).

This includes parameters that are all NaN.

#### deleteZeros()

Removes samples with zero weight

#### filter(where)

Filter the stored samples to keep only samples matching filter

* **Parameters:**

**where** – list of sample indices to keep, or boolean array filter (e.g. x>5 to keep only samples where x>5)

#### get1DDensity(name, \*\*kwargs)

Returns a [`Density1D`](densities.md#getdist.densities.Density1D) instance for parameter with given name. Result is cached.

* **Parameters:**

* **name** – name of the parameter

* **kwargs** – arguments for [`get1DDensityGridData()`](#getdist.mcsamples.MCSamples.get1DDensityGridData)

* **Returns:**

A [`Density1D`](densities.md#getdist.densities.Density1D) instance for parameter with given name

#### get1DDensityGridData(j, paramConfid=None, meanlikes=False, \*\*kwargs)

Low-level function to get a [`Density1D`](densities.md#getdist.densities.Density1D) instance for the marginalized 1D density

of a parameter. Result is not cached.

* **Parameters:**

* **j** – a name or index of the parameter

* **paramConfid** – optional cached [`ParamConfidenceData`](chains.md#getdist.chains.ParamConfidenceData) instance

* **meanlikes** – include mean likelihoods

* **kwargs** –

optional settings to override instance settings of the same name (see analysis_settings):

- **smooth_scale_1D**

- **boundary_correction_order**

- **mult_bias_correction_order**

- **fine_bins**

- **num_bins**

* **Returns:**

A [`Density1D`](densities.md#getdist.densities.Density1D) instance
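To give a feel for what a marginalized 1D kernel density is, here is a basic weighted Gaussian-kernel (Parzen) estimate on a grid. It is only a sketch: the function name, the fixed bandwidth `h` and the grid are hypothetical, and it omits the automatic bandwidth choice, boundary correction and multiplicative bias correction that the settings listed above control.

```python
import math

def parzen_kde(samples, weights, grid, h):
    """Weighted Gaussian-kernel density estimate on a grid, scaled so the peak is 1."""
    density = [sum(w * math.exp(-0.5 * ((g - s) / h) ** 2)
                   for s, w in zip(samples, weights))
               for g in grid]
    peak = max(density)
    return [d / peak for d in density]

samples = [0.0, 0.0, 1.0]
weights = [1.0, 1.0, 1.0]
grid = [-1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]

density = parzen_kde(samples, weights, grid, h=0.5)
print([round(d, 3) for d in density])
```

The estimate peaks at the grid point 0.0, where two of the three samples sit.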

#### get2DDensity(x, y, normalized=False, \*\*kwargs)

Returns a [`Density2D`](densities.md#getdist.densities.Density2D) instance with marginalized 2D density.

* **Parameters:**

* **x** – index or name of x parameter

* **y** – index or name of y parameter

* **normalized** – if False, the density is scaled so that its maximum is 1; if True, the density is normalized

* **kwargs** – keyword arguments for the [`get2DDensityGridData()`](#getdist.mcsamples.MCSamples.get2DDensityGridData) function

* **Returns:**

[`Density2D`](densities.md#getdist.densities.Density2D) instance

#### get2DDensityGridData(j, j2, num_plot_contours=None, get_density=False, meanlikes=False, mask_function: callable = None, \*\*kwargs)

Low-level function to get 2D plot marginalized density and optional additional plot data.

* **Parameters:**

* **j** – name or index of the x parameter

* **j2** – name or index of the y parameter.

* **num_plot_contours** – number of contours to calculate and return in density.contours

* **get_density** – only get the 2D marginalized density, don’t calculate confidence level members

* **meanlikes** – calculate mean likelihoods as well as marginalized density

(returned as array in density.likes)

* **mask_function** – optional function, mask_function(minx, miny, stepx, stepy, mask),

which sets mask to zero for values of parameters that are excluded by prior. Note this is not

needed for standard min, max bounds aligned with axes, as they are handled by default.

* **kwargs** –

optional settings to override instance settings of the same name (see analysis_settings):

- **fine_bins_2D**

- **boundary_correction_order**

- **mult_bias_correction_order**

- **smooth_scale_2D**

* **Returns:**

a [`Density2D`](densities.md#getdist.densities.Density2D) instance

#### getAutoBandwidth1D(bins, par, param, mult_bias_correction_order=None, kernel_order=1, N_eff=None)

Get optimized kernel density bandwidth (in units of the range of the bins)

Based on optimal Improved Sheather-Jones bandwidth for basic Parzen kernel, then scaled if higher-order method

being used. For details see the notes at [arXiv:1910.13970](https://arxiv.org/abs/1910.13970).

* **Parameters:**

* **bins** – numpy array of binned weights for the samples

* **par** – A [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) instance for the parameter to analyse

* **param** – index of the parameter to use

* **mult_bias_correction_order** – order of multiplicative bias correction (0 is basic Parzen kernel);

by default taken from instance settings.

* **kernel_order** – order of the kernel

(0 is Parzen, 1 does linear boundary correction, 2 is a higher-order kernel)

* **N_eff** – effective number of samples. If not specified estimated using weights, autocorrelations,

and fiducial bandwidth

* **Returns:**

kernel density bandwidth (in units of the range of the bins)

#### getAutoBandwidth2D(bins, parx, pary, paramx, paramy, corr, rangex, rangey, base_fine_bins_2D, mult_bias_correction_order=None, min_corr=0.2, N_eff=None, use_2D_Neff=False)

Get optimized kernel density bandwidth matrix in parameter units, using Improved Sheather Jones method in

sheared parameters. The shearing is determined using the covariance, so if you know the distribution is

multi-modal, potentially giving ‘fake’ correlation, turn off shearing by setting min_corr=1.

For details see the notes at [arXiv:1910.13970](https://arxiv.org/abs/1910.13970).

* **Parameters:**

* **bins** – 2D numpy array of binned weights

* **parx** – A [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) instance for the x parameter

* **pary** – A [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) instance for the y parameter

* **paramx** – index of the x parameter

* **paramy** – index of the y parameter

* **corr** – correlation of the samples

* **rangex** – scale in the x parameter

* **rangey** – scale in the y parameter

* **base_fine_bins_2D** – number of bins to use for re-binning in rotated parameter space

* **mult_bias_correction_order** – multiplicative bias correction order (0 is Parzen kernel); by default taken

from instance settings

* **min_corr** – minimum correlation value at which to bother de-correlating the parameters

* **N_eff** – effective number of samples. If not specified, uses rough estimate that accounts for

weights and strongly-correlated nearby samples (see notes)

* **use_2D_Neff** – if N_eff not specified, whether to use 2D estimate of effective number, or approximate from

the 1D results (default from use_effective_samples_2D setting)

* **Returns:**

kernel density bandwidth matrix in parameter units

#### getAutocorrelation(paramVec, maxOff=None, weight_units=True, normalized=True)

Gets auto-correlation of an array of parameter values (e.g. for correlated samples from MCMC)

By default, uses weight units (i.e. standard units for separate samples from original chain).

If samples are made from multiple chains, neglects edge effects.

* **Parameters:**

* **paramVec** – an array of parameter values, or the int index of the parameter in stored samples to use

* **maxOff** – maximum autocorrelation distance to return

* **weight_units** – False to get result in sample point (row) units; weight_units=False gives standard

definition for raw chains

* **normalized** – Set to False to get covariance

(note even if normalized, corr[0] != 1 in general unless weights are unity).

* **Returns:**

zero-based array giving auto-correlations
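The following sketch computes a normalized auto-correlation for a unit-weight chain in row units. It is not GetDist's implementation: the function is hypothetical, weights are ignored, and dividing every lag by the full chain length is one common convention chosen here for simplicity.

```python
def autocorrelation(x, max_off):
    """Normalized auto-correlation of a unit-weight chain for lags 0..max_off."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    c0 = sum(v * v for v in d) / n  # lag-0 term (the variance)
    return [sum(d[t] * d[t + k] for t in range(n - k)) / n / c0
            for k in range(max_off + 1)]

# Repeated values mimic rejected MCMC steps
chain = [1.0, 1.0, 2.0, 2.0, 3.0, 3.0, 2.0, 2.0]
corr = autocorrelation(chain, max_off=3)
print(corr)
```

The returned list is zero-based, so `corr[k]` is the auto-correlation at offset `k`.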

#### getBestFit(max_posterior=True)

Returns a [`BestFit`](types.md#getdist.types.BestFit) object with best-fit point stored in .minimum or .bestfit file

* **Parameters:**

**max_posterior** – whether to get maximum posterior (from .minimum file)

or maximum likelihood (from .bestfit file)

* **Returns:**

a [`BestFit`](types.md#getdist.types.BestFit) instance

#### getBounds()

Returns the bounds in the form of a [`ParamBounds`](parampriors.md#getdist.parampriors.ParamBounds) instance, for example

for determining plot ranges

Bounds are not the same as self.ranges: if samples are not near the range boundary, the bound is set to None

* **Returns:**

a [`ParamBounds`](parampriors.md#getdist.parampriors.ParamBounds) instance

#### getCombinedSamplesWithSamples(samps2, sample_weights=(1, 1))

Make a new [`MCSamples`](#getdist.mcsamples.MCSamples) instance by appending samples from samps2 for parameters which are in common.

By default, they are weighted so that the probability mass of each set of samples is the same,

independent of the actual sample sizes. The sample_weights parameter can be adjusted to change the

relative weighting.

* **Parameters:**

* **samps2** – [`MCSamples`](#getdist.mcsamples.MCSamples) instance to merge

* **sample_weights** – relative weights for combining the samples. Set to None to just directly append samples.

* **Returns:**

a new [`MCSamples`](#getdist.mcsamples.MCSamples) instance with the combined samples
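The equal-probability-mass weighting can be sketched as follows. The helper `combine_weights` is hypothetical and only shows how the weights of two sets might be rescaled; the overall normalization is arbitrary and GetDist may use a different overall scale.

```python
def combine_weights(weights1, weights2, sample_weights=(1, 1)):
    """Rescale two weight lists so their total masses are in the ratio sample_weights."""
    scale1 = sample_weights[0] / sum(weights1)
    scale2 = sample_weights[1] / sum(weights2)
    return [w * scale1 for w in weights1] + [w * scale2 for w in weights2]

w1 = [1.0, 1.0, 1.0, 1.0]  # four samples in the first set
w2 = [1.0, 1.0]            # two samples in the second set

combined = combine_weights(w1, w2)
print(combined)  # each set now carries the same total weight
```

With the default `(1, 1)` the smaller set is up-weighted per sample, so that neither set dominates simply because it has more rows.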

#### getConvergeTests(test_confidence=0.95, writeDataToFile=False, what=('MeanVar', 'GelmanRubin', 'SplitTest', 'RafteryLewis', 'CorrLengths'), filename=None, feedback=False)

Do convergence tests.

* **Parameters:**

* **test_confidence** – confidence limit to test for convergence (two-tail, only applies to some tests)

* **writeDataToFile** – True to write output to a file

* **what** –

The tests to run. Should be a list of any of the following:

- 'MeanVar': Gelman-Rubin sqrt(var(chain mean)/mean(chain var)) test in individual parameters (multiple chains only)

- 'GelmanRubin': Gelman-Rubin test for the worst orthogonalized parameter (multiple chains only)

- 'SplitTest': Crude test for variation in confidence limits when samples are split up into subsets

- 'RafteryLewis': [Raftery-Lewis test](https://stat.uw.edu/sites/default/files/files/reports/1991/tr212.pdf) (integer weight samples only)

- 'CorrLengths': Sample correlation lengths

* **filename** – The filename to write to, default is file_root.converge

* **feedback** – If set to True, prints the output as well as returning it.

* **Returns:**

text giving the output of the tests

#### getCorrelatedVariable2DPlots(num_plots=12, nparam=None)

Gets a list of most correlated variable pair names.

* **Parameters:**

* **num_plots** – The number of plots

* **nparam** – maximum number of pairs to get

* **Returns:**

list of [x,y] pair names

#### getCorrelationLength(j, weight_units=True, min_corr=0.05, corr=None)

Gets the auto-correlation length for parameter j

* **Parameters:**

* **j** – The index of the parameter to use

* **weight_units** – False to get result in sample point (row) units; weight_units=False gives standard

definition for raw chains

* **min_corr** – specifies a minimum value of the autocorrelation to use, e.g. where sampling noise is

typically as large as the calculation

* **corr** – The auto-correlation array to use, calculated internally by default

using [`getAutocorrelation()`](#getdist.mcsamples.MCSamples.getAutocorrelation)

* **Returns:**

the auto-correlation length
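One common way to turn an auto-correlation array into a correlation length is to sum it over positive and negative offsets, stopping once it falls to the `min_corr` threshold. The sketch below follows that idea; the function is hypothetical and the exact truncation rule is an assumption, not a statement of GetDist's internals.

```python
def correlation_length(corr, min_corr=0.05):
    """Sum the auto-correlation over both signs of the lag until it drops to min_corr."""
    total = corr[0]
    for c in corr[1:]:
        if c <= min_corr * corr[0]:
            break         # remaining lags are treated as sampling noise
        total += 2.0 * c  # each lag counts for the positive and the negative offset
    return total

# Hypothetical auto-correlation that halves at every lag
corr = [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125]
length = correlation_length(corr)
print(length)
```

Here the last entry (0.03125) is below the 0.05 threshold, so only the first four non-zero lags contribute.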

#### getCorrelationMatrix()

Get the correlation matrix of all parameters

* **Returns:**

The correlation matrix

#### getCov(nparam=None, pars=None)

Get covariance matrix of the parameters. By default, uses all parameters, or can limit to max number or list.

* **Parameters:**

* **nparam** – if specified, only use the first nparam parameters

* **pars** – if specified, a list of parameter indices (0,1,2..) to include

* **Returns:**

covariance matrix.

#### getCovMat()

Gets the CovMat instance containing covariance matrix for all the non-derived parameters

(for example useful for subsequent MCMC runs to orthogonalize the parameters)

* **Returns:**

A [`CovMat`](covmat.md#getdist.covmat.CovMat) object holding the covariance

#### getEffectiveSamples(j=0, min_corr=0.05)

Gets effective number of samples N_eff so that the error on mean of parameter j is sigma_j/sqrt(N_eff)

* **Parameters:**

* **j** – The index of the param to use.

* **min_corr** – the minimum value of the auto-correlation to use when estimating the correlation length
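As a hedged illustration, an effective sample number can be thought of as the total weight divided by the correlation length, and for independent draws the standard error of a mean scales as sigma divided by the square root of the number of draws. The function and the numbers below are hypothetical and are not taken from GetDist.

```python
import math

def effective_samples(total_weight, corr_length):
    """Effective number of independent samples for a given correlation length."""
    return total_weight / corr_length

n_eff = effective_samples(total_weight=2875.0, corr_length=2.875)

sigma = 0.4                               # hypothetical parameter standard deviation
error_on_mean = sigma / math.sqrt(n_eff)  # standard error for n_eff independent draws
print(n_eff, error_on_mean)
```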

#### getEffectiveSamplesGaussianKDE(paramVec, h=0.2, scale=None, maxoff=None, min_corr=0.05)

Roughly estimate an effective sample number for use in the leading term for the MISE

(mean integrated squared error) of a Gaussian-kernel KDE (Kernel Density Estimate). This is used for

optimizing the kernel bandwidth, and though approximate should be better than entirely ignoring sample

correlations, or only counting distinct samples.

Uses fiducial assumed kernel scale h; result does depend on this (typically by factors O(2))

For bias-corrected KDE only need very rough estimate to use in rule of thumb for bandwidth.

In the limit h-> 0 (but still >0) answer should be correct (then just includes MCMC rejection duplicates).

In reality correct result for practical h should depend on shape of the correlation function.

If self.sampler is ‘nested’ or ‘uncorrelated’ return result for uncorrelated samples.

* **Parameters:**

* **paramVec** – parameter array, or int index of parameter to use

* **h** – fiducial assumed kernel scale.

* **scale** – a scale parameter to determine fiducial kernel width, by default the parameter standard deviation

* **maxoff** – maximum value of auto-correlation length to use

* **min_corr** – ignore correlations smaller than this auto-correlation

* **Returns:**

A very rough effective sample number for leading term for the MISE of a Gaussian KDE.

#### getEffectiveSamplesGaussianKDE_2d(i, j, h=0.3, maxoff=None, min_corr=0.05)

Roughly estimate an effective sample number for use in the leading term for the 2D MISE.

If self.sampler is ‘nested’ or ‘uncorrelated’ return result for uncorrelated samples.

* **Parameters:**

* **i** – parameter array, or int index of first parameter to use

* **j** – parameter array, or int index of second parameter to use

* **h** – fiducial assumed kernel scale.

* **maxoff** – maximum value of auto-correlation length to use

* **min_corr** – ignore correlations smaller than this auto-correlation

* **Returns:**

A very rough effective sample number for leading term for the MISE of a Gaussian KDE.

#### getFractionIndices(weights, n)

Calculates the indices of weights that split the weights into sets of equal 1/n fraction of the total weight

* **Parameters:**

* **weights** – array of weights

* **n** – number of groups to split into

* **Returns:**

array of indices of the boundary rows in the weights array
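A simple way to find such boundaries is to search the cumulative weight for multiples of 1/n of the total. The sketch below is an assumption-laden illustration (hypothetical function, standard-library `bisect` and `itertools.accumulate`); GetDist's own boundary convention may differ by a row.

```python
from bisect import bisect_left
from itertools import accumulate

def fraction_indices(weights, n):
    """Row indices bounding n groups that each hold about 1/n of the total weight."""
    total = sum(weights)
    # Cumulative weight strictly before each row
    before = [0.0] + list(accumulate(weights))[:-1]
    starts = [bisect_left(before, total * k / n) for k in range(n)]
    return starts + [len(weights)]

weights = [1.0, 1.0, 2.0, 1.0, 1.0, 2.0]
bounds = fraction_indices(weights, 2)
print(bounds)
```

Rows `bounds[k]` up to (but not including) `bounds[k + 1]` form group `k`; in this example both groups carry a total weight of 4.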

#### getGelmanRubin(nparam=None, chainlist=None)

Assess the convergence using the maximum var(mean)/mean(var) of orthogonalized parameters

c.f. Brooks and Gelman 1997.

* **Parameters:**

* **nparam** – The number of parameters, by default uses all

* **chainlist** – list of [`WeightedSamples`](chains.md#getdist.chains.WeightedSamples), the samples to use. Defaults to all the

separate chains in this instance.

* **Returns:**

The worst var(mean)/mean(var) for orthogonalized parameters. Should be <<1 for good convergence.
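The var(mean)/mean(var) statistic can be illustrated for a single parameter with unweighted chains. This is a simplified sketch using the standard-library `statistics` module; the function is hypothetical, and GetDist applies the statistic to orthogonalized parameters rather than to one raw parameter.

```python
import statistics

def var_mean_over_mean_var(chains):
    """Between-chain variance of the means divided by the mean within-chain variance."""
    means = [statistics.fmean(c) for c in chains]
    variances = [statistics.variance(c) for c in chains]
    return statistics.variance(means) / statistics.fmean(variances)

chains = [
    [1.0, 2.0, 3.0],
    [2.0, 3.0, 4.0],
]
ratio = var_mean_over_mean_var(chains)
print(ratio)
```

The two toy chains have means that differ by a full within-chain standard deviation, so the ratio is far from the small values expected for converged chains.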

#### getGelmanRubinEigenvalues(nparam=None, chainlist=None)

Assess convergence using var(mean)/mean(var) in the orthogonalized parameters

c.f. Brooks and Gelman 1997.

* **Parameters:**

* **nparam** – The number of parameters (starting at first), by default uses all of them

* **chainlist** – list of [`WeightedSamples`](chains.md#getdist.chains.WeightedSamples), the samples to use.

Defaults to all the separate chains in this instance.

* **Returns:**

array of var(mean)/mean(var) for orthogonalized parameters

#### getInlineLatex(param, limit=1, err_sig_figs=None)

Get snippet like: A=x\\pm y. Will adjust appropriately for one and two tail limits.

* **Parameters:**

* **param** – The name of the parameter

* **limit** – which limit to get, 1 is the first (default 68%), 2 is the second

(limits array specified by self.contours)

* **err_sig_figs** – significant figures in the error

* **Returns:**

The tex snippet.

#### getLabel()

Return the latex label for the samples

* **Returns:**

the label

#### getLatex(params=None, limit=1, err_sig_figs=None)

Get tex snippet for constraints on a list of parameters

* **Parameters:**

* **params** – list of parameter names, or a single parameter name

* **limit** – which limit to get, 1 is the first (default 68%), 2 is the second

(limits array specified by self.contours)

* **err_sig_figs** – significant figures in the error

* **Returns:**

labels, texs: a list of parameter labels, and a list of tex snippets,

or for a single parameter, the latex snippet.

#### getLikeStats()

Get best fit sample and n-D confidence limits, and various likelihood based statistics

* **Returns:**

a [`LikeStats`](types.md#getdist.types.LikeStats) instance storing N-D limits for parameter i in

result.names[i].ND_limit_top, result.names[i].ND_limit_bot, and best-fit sample value

in result.names[i].bestfit_sample

#### getLower(name)

Return the lower limit of the parameter with the given name.

* **Parameters:**

**name** – parameter name

* **Returns:**

The lower limit if name exists, None otherwise.

#### getMargeStats(include_bestfit=False)

Returns a [`MargeStats`](types.md#getdist.types.MargeStats) object with marginalized 1D parameter constraints

* **Parameters:**

**include_bestfit** – if True, set best fit values by loading from root_name.minimum file (assuming it exists)

* **Returns:**

A [`MargeStats`](types.md#getdist.types.MargeStats) instance

#### getMeans(pars=None)

Gets the parameter means, from saved array if previously calculated.

* **Parameters:**

**pars** – optional list of parameter indices to return means for

* **Returns:**

numpy array of parameter means

#### getName()

Returns the name tag of these samples.

* **Returns:**

The name tag

#### getNumSampleSummaryText()

Returns a summary text describing numbers of parameters and samples,

and various measures of the effective numbers of samples.

* **Returns:**

The summary text as a string.

#### getParamBestFitDict(best_sample=False, want_derived=True, want_fixed=True, max_posterior=True)

Gets a dictionary of parameter values for the best fit point,

assuming calculated results from minimization runs in .minimum (max posterior) or .bestfit (max likelihood)

files exist.

Can also get the best-fit (max posterior) sample, which typically has a likelihood that differs significantly

from the true best fit in high dimensions.

* **Parameters:**

* **best_sample** – load from global minimum files (False, default) or using maximum posterior sample (True)

* **want_derived** – include derived parameters

* **want_fixed** – also include values of any fixed parameters

* **max_posterior** – whether to get maximum posterior (from .minimum file) or maximum likelihood

(from .bestfit file)

* **Returns:**

dictionary of parameter values

#### getParamNames()

Get [`ParamNames`](paramnames.md#getdist.paramnames.ParamNames) object with names for the parameters

* **Returns:**

[`ParamNames`](paramnames.md#getdist.paramnames.ParamNames) object giving parameter names and labels

#### getParamSampleDict(ix, want_derived=True, want_fixed=True)

Gets a dictionary of parameter values for sample number ix

* **Parameters:**

* **ix** – index of the sample to return (zero based)

* **want_derived** – include derived parameters

* **want_fixed** – also include values of any fixed parameters

* **Returns:**

dictionary of parameter values

#### getParams()

Creates a [`ParSamples`](chains.md#getdist.chains.ParSamples) object, with variables giving vectors for all the parameters,

for example samples.getParams().name1 would be the vector of samples with name ‘name1’

* **Returns:**

A [`ParSamples`](chains.md#getdist.chains.ParSamples) object containing all the parameter vectors, with attributes

given by the parameter names

#### getRawNDDensity(xs, normalized=False, \*\*kwargs)

Returns a `DensityND` instance with marginalized ND density.

* **Parameters:**

* **xs** – indices or names of x_i parameters

* **kwargs** – keyword arguments for the `getNDDensityGridData()` function

* **normalized** – if False, the density is scaled so that its maximum is 1; if True, the density is normalized

* **Returns:**

[`DensityND`](densities.md#getdist.densities.DensityND) instance

#### getRawNDDensityGridData(js, writeDataToFile=False, num_plot_contours=None, get_density=False, meanlikes=False, maxlikes=False, \*\*kwargs)

Low-level function to get the unsmoothed ND marginalized density and optional additional plot data.

* **Parameters:**

* **js** – vector of names or indices of the x_i parameters

* **writeDataToFile** – save outputs to file

* **num_plot_contours** – number of contours to calculate and return in density.contours

* **get_density** – only get the ND marginalized density, no additional plot data, no contours.

* **meanlikes** – calculate mean likelihoods as well as marginalized density

(returned as array in density.likes)

* **maxlikes** – calculate the profile likelihoods in addition to the others

(returned as array in density.maxlikes)

* **kwargs** – optional settings to override instance settings of the same name (see analysis_settings)

* **Returns:**

a [`DensityND`](densities.md#getdist.densities.DensityND) instance

#### getRenames()

Gets dictionary of renames known to each parameter.

#### getSeparateChains() → [list](https://docs.python.org/3/library/stdtypes.html#list)[[WeightedSamples](chains.md#getdist.chains.WeightedSamples)]

Gets a list of samples for separate chains.

If the chains have already been combined, uses the stored sample offsets to reconstruct the array

(generally no array copying)

* **Returns:**

The list of [`WeightedSamples`](chains.md#getdist.chains.WeightedSamples) for each chain.

#### getSignalToNoise(params, noise=None, R=None, eigs_only=False)

Returns w, M, where w is the eigenvalues of the signal to noise (small y better constrained)

* **Parameters:**

* **params** – list of parameter indices to use

* **noise** – noise matrix

* **R** – rotation matrix, defaults to inverse of Cholesky root of the noise matrix

* **eigs_only** – only return eigenvalues

* **Returns:**

w, M, where w is the eigenvalues of the signal to noise (small y better constrained)

#### getTable(columns=1, include_bestfit=False, \*\*kwargs)

Creates and returns a [`ResultTable`](types.md#getdist.types.ResultTable) instance. See also [`getInlineLatex()`](#getdist.mcsamples.MCSamples.getInlineLatex).

* **Parameters:**

* **columns** – number of columns in the table

* **include_bestfit** – True to include the bestfit parameter values (assuming set)

* **kwargs** – arguments for [`ResultTable`](types.md#getdist.types.ResultTable) constructor.

* **Returns:**

A [`ResultTable`](types.md#getdist.types.ResultTable) instance

#### getUpper(name)

Return the upper limit of the parameter with the given name.

* **Parameters:**

**name** – parameter name

* **Returns:**

The upper limit if name exists, None otherwise.

#### getVars()

Get the parameter variances

* **Returns:**

A numpy array of variances.

#### get_norm(where=None)

Gets the normalization, the sum of the sample weights: sum_i w_i

* **Parameters:**

**where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

normalization

#### initParamConfidenceData(paramVec, start=0, end=None, weights=None)

Initialize cache of data for calculating confidence intervals

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **start** – The sample start index to use

* **end** – The sample end index to use, use None to go all the way to the end of the vector

* **weights** – A numpy array of weights for each sample, defaults to self.weights

* **Returns:**

[`ParamConfidenceData`](chains.md#getdist.chains.ParamConfidenceData) instance

#### initParameters(ini)

Initializes settings.

Gets parameters from [`IniFile`](inifile.md#getdist.inifile.IniFile).

* **Parameters:**

**ini** – The [`IniFile`](inifile.md#getdist.inifile.IniFile) to be used

#### loadChains(root, files_or_samples: [Sequence](https://docs.python.org/3/library/collections.abc.html#collections.abc.Sequence), weights=None, loglikes=None, ignore_lines=None)

Loads chains from files.

* **Parameters:**

* **root** – Root name

* **files_or_samples** – list of file names or list of arrays of samples, or single array of samples

* **weights** – if loading from arrays of samples, corresponding list of arrays of weights

* **loglikes** – if loading from arrays of samples, corresponding list of arrays of -log(posterior)

* **ignore_lines** – Number of lines at the start of the file to ignore; None to not ignore any

* **Returns:**

True if loaded successfully, False if none loaded

#### makeSingle()

Combines separate chains into one samples array, so self.samples has all the samples

and this instance can then be used as a general [`WeightedSamples`](chains.md#getdist.chains.WeightedSamples) instance.

* **Returns:**

self

#### makeSingleSamples(filename='', single_thin=None, random_state=None)

Make file of unit weight samples by choosing samples

with probability proportional to their weight.

If you just want the indices of the samples use

[`random_single_samples_indices()`](chains.md#getdist.chains.WeightedSamples.random_single_samples_indices) instead.

* **Parameters:**

* **filename** – The filename to write to, leave empty if no output file is needed

* **single_thin** – factor to thin by; if not set generates as many samples as it can

up to self.max_scatter_points

* **random_state** – random seed or Generator

* **Returns:**

numpy array of selected weight-1 samples if no filename

#### mean(paramVec, where=None)

Get the mean of the given parameter vector.

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

parameter mean
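The weighted statistics in this class all build on the same two quantities, the normalization sum_i w_i and the weighted sum sum_i w_i p_i. The following is a minimal pure-Python sketch, assuming the mean is the weighted sum divided by the normalization and that `where` behaves like a boolean mask over samples. The helper names `weight_norm` and `weighted_mean` are hypothetical and are not part of GetDist.

```python
def weight_norm(weights, where=None):
    """Sum of weights, optionally restricted to samples whose mask entry is True."""
    if where is None:
        return sum(weights)
    return sum(w for w, keep in zip(weights, where) if keep)


def weighted_mean(values, weights, where=None):
    """Weighted mean: sum_i w_i p_i / sum_i w_i over the selected samples."""
    if where is None:
        where = [True] * len(values)
    total = sum(w * p for p, w, keep in zip(values, weights, where) if keep)
    return total / weight_norm(weights, where)


x = [1.0, 4.0, 6.0, 9.0]
w = [2.0, 1.0, 1.0, 4.0]

mean_all = weighted_mean(x, w)                       # uses every sample
mean_cut = weighted_mean(x, w, [v >= 5 for v in x])  # only samples with x >= 5
```

With a filter, both the weighted sum and the normalization are restricted to the selected samples, so the result is the mean of the filtered subset rather than a partial sum over the full set.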

#### mean_diff(paramVec, where=None)

Calculates an array of differences between a parameter vector and the mean parameter value

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

array of p_i - mean(p_i)

#### mean_diffs(pars: [None](https://docs.python.org/3/library/constants.html#None) | [int](https://docs.python.org/3/library/functions.html#int) | [Sequence](https://docs.python.org/3/library/collections.abc.html#collections.abc.Sequence) = None, where=None) → [Sequence](https://docs.python.org/3/library/collections.abc.html#collections.abc.Sequence)

Calculates a list of parameter vectors giving distances from parameter means

* **Parameters:**

* **pars** – if specified, list of parameter vectors or int parameter indices to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

list of arrays p_i - mean(p_i) for each parameter

#### parLabel(i)

Gets the latex label of the parameter

* **Parameters:**

**i** – The index or name of a parameter.

* **Returns:**

The parameter’s label.

#### parName(i, starDerived=False)

Gets the name of i’th parameter

* **Parameters:**

* **i** – The index of the parameter

* **starDerived** – add a star at the end of the name if the parameter is derived

* **Returns:**

The name of the parameter (string)

#### random_single_samples_indices(random_state=None, thin: [float](https://docs.python.org/3/library/functions.html#float) | [None](https://docs.python.org/3/library/constants.html#None) = None, max_samples: [int](https://docs.python.org/3/library/functions.html#int) | [None](https://docs.python.org/3/library/constants.html#None) = None)

Returns an array of sample indices that give a list of weight-one samples, by randomly

selecting samples depending on the sample weights

* **Parameters:**

* **random_state** – random seed or Generator

* **thin** – additional thinning factor (>1 to get fewer samples)

* **max_samples** – optional parameter to thin to get a specified mean maximum number of samples

* **Returns:**

array of sample indices
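One common way to turn weighted samples into weight-one samples is to keep each sample with probability proportional to its weight. The sketch below illustrates that idea using only the standard library. It assumes acceptance probability w_i / max(w) and ignores the `thin` and `max_samples` options. The function name `weight_one_indices` is hypothetical, and this is not GetDist's implementation.

```python
import random


def weight_one_indices(weights, seed=None):
    """Keep sample i with probability weights[i] / max(weights)."""
    rng = random.Random(seed)
    max_weight = max(weights)
    return [i for i, w in enumerate(weights) if rng.random() < w / max_weight]


weights = [0.0, 0.5, 2.0, 1.0, 0.0, 2.0]
kept = weight_one_indices(weights, seed=1)
```

Samples with the maximum weight are always kept and zero-weight samples never are. Intermediate weights are kept at random, so the number of indices returned varies with the seed.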

#### readChains(files_or_samples, weights=None, loglikes=None)

Loads samples from a list of files or array(s), removing burn in,

deleting fixed parameters, and combining into one self.samples array

* **Parameters:**

* **files_or_samples** – The list of file names to read, samples or list of samples

* **weights** – array of weights if setting from arrays

* **loglikes** – array of -log(posterior) if setting from arrays

* **Returns:**

self.

#### removeBurn(remove=0.3)

Removes burn-in from the start of the samples

* **Parameters:**

**remove** – fraction of samples to remove, or if int >1, the number of sample rows to remove
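The `remove` argument has two meanings depending on its size. A minimal sketch of that rule follows, assuming that values below 1 are fractions of the rows and values of 1 or more are row counts. The treatment of exactly 1 and the rounding of the fractional case are assumptions, and `burn_rows` is a hypothetical helper rather than a GetDist function.

```python
def burn_rows(num_rows, remove=0.3):
    """Number of leading rows to drop: a count if remove >= 1, else a fraction."""
    if remove >= 1:
        return int(remove)
    return int(round(num_rows * remove))


chain = list(range(10))
after_fraction = chain[burn_rows(len(chain), 0.3):]  # drops the first 3 rows
after_count = chain[burn_rows(len(chain), 4):]       # drops the first 4 rows
```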

#### removeBurnFraction(ignore_frac)

Remove a fraction of the samples as burn in

* **Parameters:**

**ignore_frac** – fraction of sample points to remove from the start of the samples, or each chain

if not combined

#### reweightAddingLogLikes(logLikes)

Importance sample the samples, by adding logLikes (array of -log(likelihood) values) to the currently stored likelihoods, and re-weighting accordingly, e.g. for adding a new data constraint.

* **Parameters:**

**logLikes** – array of -log(likelihood) for each sample to adjust
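Importance sampling with an extra likelihood multiplies each sample weight by the new likelihood, that is by exp(-logLike_i), and adds logLike_i to the stored -log(likelihood). The sketch below shows that arithmetic in plain Python. Subtracting the minimum before exponentiating is an assumption made here for numerical stability; it only rescales all weights by a common factor. The function name `reweight` is hypothetical.

```python
import math


def reweight(weights, loglikes, delta):
    """Add delta (-log likelihood) to loglikes and scale weights by exp(-delta)."""
    shift = min(delta)  # common factor; cancels once weights are normalized
    new_weights = [w * math.exp(-(d - shift)) for w, d in zip(weights, delta)]
    new_loglikes = [ll + d for ll, d in zip(loglikes, delta)]
    return new_weights, new_loglikes


weights = [1.0, 1.0, 2.0]
loglikes = [10.0, 11.0, 12.0]
delta = [0.5, 0.5 + math.log(2.0), 0.5]  # second sample is half as likely

new_weights, new_loglikes = reweight(weights, loglikes, delta)
```

Because only relative weights matter for weighted statistics, the shift changes none of the resulting means or limits.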

#### saveAsText(root, chain_index=None, make_dirs=False)

Saves the samples as text files, including parameter names as .paramnames file.

* **Parameters:**

* **root** – The root name to use

* **chain_index** – Optional index to be used for the filename, zero based, e.g. for saving one

of multiple chains

* **make_dirs** – True if this should (recursively) create the directory if it doesn’t exist

#### savePickle(filename)

Save the current object to a file in pickle format

* **Parameters:**

**filename** – The file to write to

#### saveTextMetadata(root, properties=None)

Saves metadata about the samples to text files with given file root

* **Parameters:**

* **root** – root file name

* **properties** – optional dictionary of values to save in root.properties.ini

#### setColData(coldata, are_chains=True)

Set the samples given an array loaded from file

* **Parameters:**

* **coldata** – The array with columns of [weights, -log(Likelihoods)] and sample parameter values

* **are_chains** – True if coldata starts with two columns giving weight and -log(Likelihood)

#### setDiffs()

Saves self.diffs array of parameter differences from the means, e.g. to later calculate variances etc.

* **Returns:**

array of differences

#### setMeans()

Calculates and saves the means of the samples

* **Returns:**

numpy array of parameter means

#### setMinWeightRatio(min_weight_ratio=1e-30)

Removes samples with weight less than min_weight_ratio times the maximum weight

* **Parameters:**

**min_weight_ratio** – minimum ratio to max to exclude

#### setParamNames(names=None)

Sets the names of the params.

* **Parameters:**

**names** – Either a [`ParamNames`](paramnames.md#getdist.paramnames.ParamNames) object, the name of a .paramnames file to load, or a list of name strings; otherwise default names are used (param1, param2…).

#### setParams(obj)

Adds array variables obj.name1, obj.name2 etc., where obj.name1 is the vector of samples with name ‘name1’.

If a parameter name is of the form aa.bb.cc, it makes subobjects so that you can reference obj.aa.bb.cc.

If aa.bb and aa are both parameter names, then aa becomes obj.aa.value.

* **Parameters:**

**obj** – The object instance to add the parameter vectors variables

* **Returns:**

The obj after alterations.
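The dotted-name rule described above can be sketched with `types.SimpleNamespace`. This is an illustration of the naming behaviour only, assuming sample vectors are supplied as a plain dict. The function `attach_params` and the example names are hypothetical and do not reproduce GetDist's code.

```python
from types import SimpleNamespace


def attach_params(obj, vectors):
    """Attach each named vector to obj, nesting on dots in the name."""
    # Every proper dotted prefix needs a container, e.g. "aa" for "aa.bb".
    containers = set()
    for name in vectors:
        parts = name.split(".")
        for depth in range(1, len(parts)):
            containers.add(".".join(parts[:depth]))

    for name, vector in vectors.items():
        parts = name.split(".")
        if name in containers:
            parts.append("value")  # name is also a prefix, so store it as .value
        target = obj
        for part in parts[:-1]:
            if not hasattr(target, part):
                setattr(target, part, SimpleNamespace())
            target = getattr(target, part)
        setattr(target, parts[-1], vector)
    return obj


vectors = {"name1": [1.0, 2.0], "aa": [0.1, 0.2], "aa.bb": [3.0, 4.0]}
p = attach_params(SimpleNamespace(), vectors)
```

Here `p.name1` and `p.aa.bb` are the sample vectors, while the vector for `aa` is reached as `p.aa.value` because `aa` also has to act as a container.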

#### setRanges(ranges)

Sets the parameter ranges, e.g. hard priors on positivity etc.

If a min or max value is None, then it is assumed to be unbounded.

* **Parameters:**

**ranges** – A list or a tuple of [min,max] values for each parameter,

or a dictionary giving [min,max] values for specific parameter names.

For periodic parameters use dictionary with [min, max, True] entry.
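As an illustration of the dictionary form, the sketch below builds a ranges dictionary and unpacks each entry. The parameter names `omega` and `phi` are hypothetical, and `describe` is a helper written for this example, not a GetDist function.

```python
import math

# Hypothetical parameter names; None marks an unbounded side.
ranges = {
    "omega": [0.0, None],             # hard prior: omega >= 0, no upper limit
    "phi": [0.0, 2 * math.pi, True],  # periodic parameter on [0, 2*pi]
}


def describe(entry):
    """Split a [min, max] or [min, max, periodic] entry into named fields."""
    lower, upper = entry[0], entry[1]
    periodic = len(entry) > 2 and bool(entry[2])
    return {"lower": lower, "upper": upper, "periodic": periodic}


described = {name: describe(entry) for name, entry in ranges.items()}
```

A dictionary of this shape is what would be passed as the `ranges` argument. Parameters not listed in it are left without explicit bounds.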

#### setSamples(samples, weights=None, loglikes=None, min_weight_ratio=None)

Sets the samples from numpy arrays

* **Parameters:**

* **samples** – The sample values, n_samples x n_parameters numpy array, or can be a list of parameter vectors

* **weights** – Array of weights for each sample. Defaults to 1 for all samples if unspecified.

* **loglikes** – Array of -log(posterior) values for each sample.

* **min_weight_ratio** – remove samples with weight less than min_weight_ratio of the maximum

#### std(paramVec, where=None)

Get the standard deviation of the given parameter vector.

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

parameter standard deviation.

#### thin(factor: [int](https://docs.python.org/3/library/functions.html#int))

Thin the samples by the given factor, giving set of samples with unit weight

* **Parameters:**

**factor** – The factor to thin by

#### thin_indices(factor, weights=None)

Indices to make single weight 1 samples. Assumes integer weights.

* **Parameters:**

* **factor** – The factor to thin by, should be int.

* **weights** – The weights to thin, None if this should use the weights stored in the object.

* **Returns:**

array of indices of samples to keep

#### *static* thin_indices_and_weights(factor, weights)

Returns indices and new weights for use when thinning samples.

* **Parameters:**

* **factor** – thin factor

* **weights** – initial weight (counts) per sample point

* **Returns:**

(unique index, counts) tuple of sample index values to keep and new weights
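Thinning integer-weighted samples can be pictured as expanding each sample into as many copies as its weight and then keeping every `factor`-th copy. The sketch below follows that picture literally. It assumes the first kept copy is at position 0 (the actual starting offset may differ), and the function names are hypothetical.

```python
from collections import Counter


def thin_to_indices(factor, weights):
    """Index of the sample owning every factor-th unit of integer weight."""
    expanded = [i for i, w in enumerate(weights) for _ in range(int(w))]
    return expanded[::factor]


def thin_to_indices_and_weights(factor, weights):
    """Unique kept indices and how many times each one was selected."""
    counts = Counter(thin_to_indices(factor, weights))
    unique = sorted(counts)
    return unique, [counts[i] for i in unique]


weights = [1, 3, 2, 4]
kept = thin_to_indices(2, weights)
unique, counts = thin_to_indices_and_weights(2, weights)
```

The first function corresponds to unit-weight thinning, where a heavily weighted sample can appear more than once. The second collapses those repeats into new integer weights, which is the form needed for weighted thinning.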

#### twoTailLimits(paramVec, confidence)

Calculates two-tail equal-area confidence limit by counting samples in the tails

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **confidence** – confidence limit to calculate, e.g. 0.95 for 95% confidence

* **Returns:**

min, max values for the confidence interval
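Counting samples in the tails means sorting the parameter values and walking in from each end until a fraction (1 - confidence) / 2 of the total weight has been passed. A minimal sketch of that procedure follows, without any interpolation between neighbouring samples. The function name `two_tail_limits` is hypothetical, and the exact handling at the boundary sample is an assumption.

```python
def two_tail_limits(values, weights, confidence):
    """Equal-area limits: each tail holds (1 - confidence) / 2 of the weight."""
    pairs = sorted(zip(values, weights))
    total = sum(w for _, w in pairs)
    tail = (1.0 - confidence) / 2.0 * total

    def first_value_reaching(target, ordered):
        cumulative = 0.0
        for value, weight in ordered:
            cumulative += weight
            if cumulative >= target:
                return value
        return ordered[-1][0]

    lower = first_value_reaching(tail, pairs)        # walk up from the smallest value
    upper = first_value_reaching(tail, pairs[::-1])  # walk down from the largest value
    return lower, upper


values = [3.0, 1.0, 2.0, 4.0]
weights = [1.0, 1.0, 2.0, 4.0]
limits = two_tail_limits(values, weights, 0.5)
```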

#### updateBaseStatistics()

Updates basic computed statistics (means, covariance etc.), e.g. after a change in samples or weights

* **Returns:**

self

#### updateRenames(renames)

Updates the renames known to each parameter with the given dictionary of renames.

#### updateSettings(settings: [Mapping](https://docs.python.org/3/library/collections.abc.html#collections.abc.Mapping)[[str](https://docs.python.org/3/library/stdtypes.html#str), [Any](https://docs.python.org/3/library/typing.html#typing.Any)] | [None](https://docs.python.org/3/library/constants.html#None) = None, ini: [None](https://docs.python.org/3/library/constants.html#None) | [str](https://docs.python.org/3/library/stdtypes.html#str) | [IniFile](inifile.md#getdist.inifile.IniFile) = None, doUpdate=True)

Updates settings from a .ini file or dictionary

* **Parameters:**

* **settings** – A dict containing settings to set, taking preference over any values in ini

* **ini** – The name of .ini file to get settings from, or an [`IniFile`](inifile.md#getdist.inifile.IniFile) instance; by default

uses current settings

* **doUpdate** – True if we should update internal computed values, False otherwise (e.g. if you want to make

other changes first)

#### var(paramVec, where=None)

Get the variance of the given parameter vector.

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

parameter variance

#### weighted_sum(paramVec, where=None)

Calculates the weighted sum of a parameter vector, sum_i w_i p_i

* **Parameters:**

* **paramVec** – array of parameter values or int index of parameter to use

* **where** – if specified, a filter for the samples to use

(where x>=5 would mean only process samples with x>=5).

* **Returns:**

weighted sum

#### weighted_thin(factor: [int](https://docs.python.org/3/library/functions.html#int))

Thin the samples by the given factor, giving (in general) non-unit integer weights.

This function also preserves separate chains.

* **Parameters:**

**factor** – The (integer) factor to thin by

#### writeCorrelationMatrix(filename=None)

Write the correlation matrix to a file

* **Parameters:**

**filename** – The file to write to; if None, writes to file_root.corr

#### writeCovMatrix(filename=None)

Writes the covariance matrix of non-derived parameters to a file.

* **Parameters:**

**filename** – The filename to write to; default is file_root.covmat

#### writeThinData(fname, thin_ix, cool=1)

Writes samples at thin_ix to file

* **Parameters:**

* **fname** – The filename to write to.

* **thin_ix** – Indices of the samples to write

* **cool** – if not 1, cools the samples by this factor

### *exception* getdist.mcsamples.MCSamplesError

An Exception that is raised when there is an error inside the MCSamples class.

### *exception* getdist.mcsamples.ParamError

An Exception that indicates a bad parameter.

### *exception* getdist.mcsamples.SettingError

An Exception that indicates bad settings.

## https://getdist.readthedocs.io/en/latest/plots.html

# getdist.plots

This module is used for making plots from samples. The [`get_single_plotter()`](#getdist.plots.get_single_plotter) and [`get_subplot_plotter()`](#getdist.plots.get_subplot_plotter) functions are used to make a plotter instance,

which is then used to make and export plots.

Many plotter functions take a **roots** argument, which is either a root name for

some chain files, or an in-memory [`MCSamples`](mcsamples.md#getdist.mcsamples.MCSamples) instance. You can also make comparison plots by giving a list of either of these.

Parameters are referenced simply by name (as specified in the .paramnames file when loading from file, or set in the [`MCSamples`](mcsamples.md#getdist.mcsamples.MCSamples) instance).

For functions that take lists of parameters, these can be just lists of names.

You can also use glob patterns to match specific subsets of parameters (e.g. *x\** to match all parameters with names starting with *x*).
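To show what glob-style selection means in practice, the sketch below uses Python's standard `fnmatch` module on a list of hypothetical parameter names. It illustrates the pattern semantics only. How GetDist resolves patterns internally is not shown here, and `expand_patterns` is a helper written for this example.

```python
from fnmatch import fnmatchcase

# Hypothetical parameter names, as they might appear in a .paramnames file.
param_names = ["x1", "x2", "y1", "xi_plus", "omega"]


def expand_patterns(patterns, names):
    """Return names matching each pattern in turn, without repeats."""
    selected = []
    for pattern in patterns:
        for name in names:
            if fnmatchcase(name, pattern) and name not in selected:
                selected.append(name)
    return selected


starts_with_x = expand_patterns(["x*"], param_names)
mixed = expand_patterns(["omega", "x?"], param_names)
```

In this sketch a plain name such as `omega` behaves as a pattern that matches only itself, so explicit names and patterns can be mixed in one list.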

| Function or class | Description |
|-------------------------------------------------------------|----------------------------------------------------------------------------------------------------|
| [`get_single_plotter`](#getdist.plots.get_single_plotter) | Get a [`GetDistPlotter`](#getdist.plots.GetDistPlotter) for making a single plot of fixed width. |
| [`get_subplot_plotter`](#getdist.plots.get_subplot_plotter) | Get a [`GetDistPlotter`](#getdist.plots.GetDistPlotter) for making an array of subplots. |
| [`GetDistPlotter`](#getdist.plots.GetDistPlotter) | Main class for making plots from one or more sets of samples. |
| [`GetDistPlotSettings`](#getdist.plots.GetDistPlotSettings) | Settings class (colors, sizes, font, styles etc.) |

### *exception* getdist.plots.GetDistPlotError

An exception that is raised when there is an error plotting

### *class* getdist.plots.GetDistPlotSettings(subplot_size_inch: [float](https://docs.python.org/3/library/functions.html#float) = 2, fig_width_inch: [float](https://docs.python.org/3/library/functions.html#float) | [None](https://docs.python.org/3/library/constants.html#None) = None)

Settings class (colors, sizes, font, styles etc.)

* **Variables:**

* **alpha_factor_contour_lines** – alpha factor for adding contour lines between filled contours

* **alpha_filled_add** – alpha for adding filled contours to a plot

* **axes_fontsize** – Size for axis font at reference axis size

* **axes_labelsize** – Size for axis label font at reference axis size

* **axis_marker_color** – The color for a marker

* **axis_marker_ls** – The line style for a marker

* **axis_marker_lw** – The line width for a marker

* **axis_tick_powerlimits** – exponents at which to use scientific notation for axis tick labels

* **axis_tick_max_labels** – maximum number of tick labels per axis

* **axis_tick_step_groups** – steps to try for axis ticks, grouped in order of preference

* **axis_tick_x_rotation** – The rotation for the x tick label in degrees

* **axis_tick_y_rotation** – The rotation for the y tick label in degrees

* **colorbar_axes_fontsize** – size for tick labels on colorbar (None for default to match axes font size)

* **colorbar_label_pad** – padding for the colorbar label

* **colorbar_label_rotation** – angle to rotate colorbar label (set to zero if -90 default gives layout problem)

* **colorbar_tick_rotation** – angle to rotate colorbar tick labels

* **colormap** – a [Matplotlib color map](https://www.scipy.org/Cookbook/Matplotlib/Show_colormaps) for shading

* **colormap_scatter** – a Matplotlib [color map](https://www.scipy.org/Cookbook/Matplotlib/Show_colormaps)

for 3D scatter plots

* **constrained_layout** – use matplotlib’s constrained-layout to fit plots within the figure and avoid overlaps.

* **fig_width_inch** – The width of the figure in inches

* **figure_legend_frame** – draw box around figure legend

* **figure_legend_loc** – The location for the figure legend

* **figure_legend_ncol** – number of columns for figure legend (set to zero to use defaults)

* **fontsize** – font size for text (and ultimate fallback when others not set)

* **legend_colored_text** – use colored text for legend labels rather than separate color blocks

* **legend_fontsize** – The font size for the legend (defaults to fontsize)

* **legend_frac_subplot_margin** – fraction of subplot size to use for spacing figure legend above plots

* **legend_frame** – draw box around legend

* **legend_loc** – The location for the legend

* **legend_rect_border** – whether to have black border around solid color boxes in legends

* **line_dash_styles** – dict mapping line styles to detailed dash styles,

default: `{'--': (3, 2), '-.': (4, 1, 1, 1)}`

* **line_labels** – True if you want to automatically add legends when adding more than one line to subplots

* **line_styles** – list of default line styles/colors (`['-k', '-r', '--C0', ...]`) or name of a standard colormap

(e.g. tab10), or a list of tuples of line styles and colors for each line

* **linewidth** – relative linewidth (at reference size)

* **linewidth_contour** – linewidth for lines in filled contours

* **linewidth_meanlikes** – linewidth for mean likelihood lines

* **no_triangle_axis_labels** – whether subplots in triangle plots should show axis labels if not at the edge

* **norm_1d_density** – whether to normalize 1D densities (otherwise normalized to unit peak value)

* **norm_prob_label** – label for the y axis in normalized 1D density plots

* **num_plot_contours** – number of contours to plot in 2D plots (up to number of contours in analysis settings)

* **num_shades** – number of distinct colors to use for shading shaded 2D plots

* **param_names_for_labels** – file name of .paramnames file to use for overriding parameter labels for plotting

* **plot_args** – dict, or list of dicts, giving settings like color, ls, alpha, etc. to apply for a plot or each

line added

* **plot_meanlikes** – include mean likelihood lines in 1D plots

* **prob_label** – label for the y axis in unnormalized 1D density plots

* **prob_y_ticks** – show ticks on y axis for 1D density plots

* **progress** – write out some status

* **scaling** – True to scale down fonts and lines for smaller subplots; False to use fixed sizes.

* **scaling_max_axis_size** – font sizes will only be scaled for subplot widths (in inches) smaller than this.

* **scaling_factor** – factor by which to multiply the difference of the axis size to the reference size when

scaling font sizes

* **scaling_reference_size** – axis width (in inches) at which font sizes are specified.

* **direct_scaling** – True to directly scale the font size with the axis size for small axes (can be very small)

* **scatter_size** – size of points in “3D” scatter plots

* **shade_level_scale** – shading contour colors are put at [0:1:spacing]\*\*shade_level_scale

* **shade_meanlikes** – 2D shading uses mean likelihoods rather than marginalized density

* **solid_colors** – List of default colors for filled 2D plots or the name of a colormap (e.g. tab10). If a list,

each element is either a color, or a tuple of values for different contour levels.

* **solid_contour_palefactor** – factor by which to make 2D outer filled contours paler when only specifying

one contour color

* **subplot_size_ratio** – ratio of height to width of subplots

* **tight_layout** – use tight_layout to layout, avoid overlaps and remove white space; if it doesn’t work

try constrained_layout. If true it is applied when calling [`finish_plot()`](#getdist.plots.GetDistPlotter.finish_plot)

(which is called automatically by plots_xd(), triangle_plot and rectangle_plot).

* **title_limit** – show parameter limits over 1D plots, 1 for first limit (68% default), 2 second, etc.

* **title_limit_labels** – whether or not to include parameter label when adding limits above 1D plots

* **title_limit_fontsize** – font size to use for limits in plot titles (defaults to axes_labelsize)

If fig_width_inch is set, the total figure width is fixed at that size in inches.

Otherwise, subplot_size_inch determines the default font sizes etc., and the figure will be as wide as necessary to show all subplots at the specified size.

* **Parameters:**

* **subplot_size_inch** – Determines the size of subplots, and hence default font sizes

* **fig_width_inch** – The width of the figure in inches; if set, forces fixed total size.

#### rc_sizes(axes_fontsize=None, lab_fontsize=None, legend_fontsize=None)

Sets the font sizes by default from matplotlib.rcParams defaults

* **Parameters:**

* **axes_fontsize** – The font size for the plot axes tick labels (default: xtick.labelsize).

* **lab_fontsize** – The font size for the plot’s axis labels (default: axes.labelsize)

* **legend_fontsize** – The font size for the plot’s legend (default: legend.fontsize)

#### set_with_subplot_size(size_inch=3.5, size_mm=None, size_ratio=None)

Sets the subplot’s size, either in inches or in millimeters.

If both are set, uses millimeters.

* **Parameters:**

* **size_inch** – The size to set in inches; is ignored if size_mm is set.

* **size_mm** – None if not used, otherwise the size in millimeters we want to set for the subplot.

* **size_ratio** – ratio of height to width of subplots
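The precedence between the two size arguments can be sketched as a small unit conversion. The version below assumes 25.4 mm per inch and, purely for illustration, treats a missing `size_ratio` as square subplots. The real default behaviour when `size_ratio` is None is not specified above, and `subplot_size` is a hypothetical helper.

```python
MM_PER_INCH = 25.4


def subplot_size(size_inch=3.5, size_mm=None, size_ratio=None):
    """Return (width_inch, height_inch); size_mm overrides size_inch when given."""
    width = size_mm / MM_PER_INCH if size_mm is not None else size_inch
    ratio = 1.0 if size_ratio is None else size_ratio  # assumed default: square
    return width, width * ratio


from_inches = subplot_size(size_inch=3.5)
from_mm = subplot_size(size_inch=4, size_mm=50.8, size_ratio=0.75)  # mm wins
```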

### *class* getdist.plots.GetDistPlotter(chain_dir: [str](https://docs.python.org/3/library/stdtypes.html#str) | [Iterable](https://docs.python.org/3/library/collections.abc.html#collections.abc.Iterable)[[str](https://docs.python.org/3/library/stdtypes.html#str)] | [None](https://docs.python.org/3/library/constants.html#None) = None, settings: [GetDistPlotSettings](#getdist.plots.GetDistPlotSettings) | [None](https://docs.python.org/3/library/constants.html#None) = None, analysis_settings: [str](https://docs.python.org/3/library/stdtypes.html#str) | [dict](https://docs.python.org/3/library/stdtypes.html#dict) | [IniFile](inifile.md#getdist.inifile.IniFile) = None, auto_close=False)

Main class for making plots from one or more sets of samples.

* **Variables:**

* **settings** – a [`GetDistPlotSettings`](#getdist.plots.GetDistPlotSettings) instance with settings

* **subplots** – a 2D array of [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) for subplots

* **sample_analyser** – a [`MCSampleAnalysis`](#getdist.plots.MCSampleAnalysis) instance for getting [`MCSamples`](mcsamples.md#getdist.mcsamples.MCSamples)

and derived data from a given root name tag (e.g. sample_analyser.samples_for_root(‘rootname’))

* **Parameters:**

* **chain_dir** – Set this to a directory or grid directory hierarchy to search for chains

(can also be a list of such, searched in order)

* **analysis_settings** – The settings to be used by [`MCSampleAnalysis`](#getdist.plots.MCSampleAnalysis) when analysing samples

* **auto_close** – whether to automatically close the figure whenever a new plot is made or this instance is released

#### add_1d(root, param, plotno=0, normalized=None, ax=None, title_limit=None, \*\*kwargs)

Low-level function to add a 1D marginalized density line to a plot

* **Parameters:**

* **root** – The root name of the samples

* **param** – The parameter name

* **plotno** – The index of the line being added to the plot

* **normalized** – True if areas under the curves should match, False if normalized to unit maximum.

Default from settings.norm_1d_density.

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate)

to add to (defaults to current plot or the first/main plot if none)

* **title_limit** – if not None, a marginalized limit (1,2..) to print as the title of the plot

* **kwargs** – arguments for [`plot()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.plot.html#matplotlib.pyplot.plot)

* **Returns:**

min, max for the plotted density

#### add_2d_contours(root, param1=None, param2=None, plotno=0, of=None, cols=None, contour_levels=None, add_legend_proxy=True, param_pair=None, density=None, alpha=None, ax=None, mask_function: callable = None, \*\*kwargs)

Low-level function to add 2D contours to plot for samples with given root name and parameters

* **Parameters:**

* **root** – The root name of samples to use or a [`MixtureND`](gaussian_mixtures.md#getdist.gaussian_mixtures.MixtureND) gaussian mixture

* **param1** – x parameter

* **param2** – y parameter

* **plotno** – The index of the contour lines being added

* **of** – the total number of contours being added (this is line plotno of `of`)

* **cols** – optional list of colors to use for contours, by default uses default for this plotno

* **contour_levels** – levels at which to plot the contours, by default given by contours array in

the analysis settings

* **add_legend_proxy** – True to add a proxy to the legend of this plot.

* **param_pair** – an [x,y] parameter name pair if you prefer to provide this rather than param1 and param2

* **density** – optional [`Density2D`](densities.md#getdist.densities.Density2D) to plot rather than that computed automatically

from the samples

* **alpha** – alpha for the contours added

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate)

to add to (defaults to current plot or the first/main plot if none)

* **mask_function** – optional function, mask_function(minx, miny, stepx, stepy, mask),

which sets mask to zero for values of parameter name that are excluded by prior.

This is used to correctly estimate densities near the boundary.

See the example in the plot gallery.

* **kwargs** –

optional keyword arguments:

- **filled**: True to make filled contours

- **color**: top color to automatically make paling contour colours for a filled plot

- kwargs for [`contour()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.contour.html#matplotlib.pyplot.contour) and [`contourf()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.contourf.html#matplotlib.pyplot.contourf)

* **Returns:**

bounds (from [`bounds()`](densities.md#getdist.densities.GridDensity.bounds)) for the 2D density plotted
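
As a rough illustration of the `mask_function` argument, the sketch below zeros the mask wherever a hypothetical prior x >= y is violated. The prior itself, the name `prior_mask`, and the assumption that `mask` is indexed as (y, x) are illustrative choices modelled on the plot gallery example; this reference does not specify them.

```python
import numpy as np


def prior_mask(minx, miny, stepx, stepy, mask):
    """Zero `mask` in place where the hypothetical prior x >= y is violated."""
    # Grid coordinates, assuming mask has shape (ny, nx)
    x = minx + stepx * np.arange(mask.shape[1])
    y = miny + stepy * np.arange(mask.shape[0])
    X, Y = np.meshgrid(x, y)
    mask[X < Y] = 0


# Stand-alone check on a small grid of ones
mask = np.ones((4, 5))
prior_mask(0.0, 0.0, 1.0, 1.0, mask)
```

A function of this form would then be passed as `mask_function=prior_mask`.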

#### add_2d_covariance(means, cov, xvals=None, yvals=None, def_width=4.0, samples_per_std=50.0, \*\*kwargs)

Plot 2D Gaussian ellipse. By default, plots contours for 1 and 2 sigma.

Specify contour_levels argument to plot other contours (for density normalized to peak at unity).

* **Parameters:**

* **means** – array of the two mean values, [x mean, y mean]

* **cov** – the 2x2 covariance

* **xvals** – optional array of x values to evaluate at

* **yvals** – optional array of y values to evaluate at

* **def_width** – if evaluation array not specified, width to use in units of standard deviation

* **samples_per_std** – if evaluation array not specified, number of grid points per standard deviation

* **kwargs** – keyword arguments for [`add_2d_contours()`](#getdist.plots.GetDistPlotter.add_2d_contours)
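
The relationship between `def_width`, `samples_per_std` and contour levels "for density normalized to peak at unity" can be sketched with NumPy alone. This is not GetDist's implementation: the mean and covariance values are made up, and the sketch assumes that "1 and 2 sigma" corresponds to 68% and 95% enclosed probability.

```python
import numpy as np

means = np.array([0.0, 1.0])              # [x mean, y mean], illustrative values
cov = np.array([[1.0, 0.5], [0.5, 2.0]])  # 2x2 covariance, illustrative values
def_width, samples_per_std = 4.0, 50.0

# Evaluation grids spanning +/- def_width standard deviations
sx, sy = np.sqrt(cov[0, 0]), np.sqrt(cov[1, 1])
xvals = np.arange(means[0] - def_width * sx, means[0] + def_width * sx, sx / samples_per_std)
yvals = np.arange(means[1] - def_width * sy, means[1] + def_width * sy, sy / samples_per_std)

# Gaussian density normalized to peak at unity: exp(-chi^2 / 2)
dx, dy = np.meshgrid(xvals - means[0], yvals - means[1])
inv = np.linalg.inv(cov)
chi2 = inv[0, 0] * dx**2 + 2 * inv[0, 1] * dx * dy + inv[1, 1] * dy**2
density = np.exp(-chi2 / 2)

# For a 2D Gaussian, the region with density above L encloses probability 1 - L
levels = 1 - np.array([0.68, 0.95])
enclosed = [density[density >= L].sum() / density.sum() for L in levels]
```

Under these assumptions, levels of roughly 0.32 and 0.05 on the peak-normalized density enclose about 68% and 95% of the probability; other values can be supplied through `contour_levels`.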

#### add_2d_density_contours(density, \*\*kwargs)

Low-level function to add 2D contours to a plot using provided density

* **Parameters:**

* **density** – a [`densities.Density2D`](densities.md#getdist.densities.Density2D) instance

* **kwargs** – arguments for [`add_2d_contours()`](#getdist.plots.GetDistPlotter.add_2d_contours)

* **Returns:**

bounds (from `bounds()`) of density

#### add_2d_scatter(root, x, y, color='k', alpha=1, extra_thin=1, scatter_size=None, ax=None)

Low-level function to add a 2D sample scatter plot to the current axes (or ax if specified).

* **Parameters:**

* **root** – The root name of the samples to use

* **x** – name of x parameter

* **y** – name of y parameter

* **color** – color to plot the samples

* **alpha** – The alpha to use.

* **extra_thin** – thin the weight-one samples by this additional factor before plotting

* **scatter_size** – point size (default: settings.scatter_size)

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate) to add to (defaults to current plot or the first/main plot if none)

* **Returns:**

(xmin, xmax), (ymin, ymax) bounds for the axes.

#### add_2d_shading(root, param1, param2, colormap=None, density=None, ax=None, \*\*kwargs)

Low-level function to add 2D density shading to the given plot.

* **Parameters:**

* **root** – The root name of samples to use

* **param1** – x parameter

* **param2** – y parameter

* **colormap** – color map, default to settings.colormap (see [`GetDistPlotSettings`](#getdist.plots.GetDistPlotSettings))

* **density** – optional user-provided [`Density2D`](densities.md#getdist.densities.Density2D) to plot rather than the auto-generated density from the samples

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate) to add to (defaults to current plot or the first/main plot if none)

* **kwargs** – keyword arguments for [`contourf()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.contourf.html#matplotlib.pyplot.contourf)

#### add_3d_scatter(root, params, color_bar=True, alpha=1, extra_thin=1, scatter_size=None, ax=None, alpha_samples=False, \*\*kwargs)

Low-level function to add a 3D scatter plot to the current axes (or ax if specified).

Here 3D means a 2D plot, with samples colored by a third parameter.

* **Parameters:**

* **root** – The root name of the samples to use

* **params** – list of parameters to plot

* **color_bar** – True to add a colorbar for the plotted scatter color

* **alpha** – The alpha to use.

* **extra_thin** – thin the weight-one samples by this additional factor before plotting

* **scatter_size** – point size (default: settings.scatter_size)

* **alpha_samples** – use all samples, giving each point alpha corresponding to relative weight

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate) to add to (defaults to current plot or the first/main plot if none)

* **kwargs** – arguments for [`add_colorbar()`](#getdist.plots.GetDistPlotter.add_colorbar)

* **Returns:**

(xmin, xmax), (ymin, ymax) bounds for the axes.

#### add_bands(x, y, errors, color='gray', nbands=2, alphas=(0.25, 0.15, 0.1), lw=0.2, lw_center=None, linecolor='k', ax=None)

Add a constraint band as a function of x showing e.g. a 1 and 2 sigma range.

* **Parameters:**

* **x** – array of x values

* **y** – array of central values for the band as function of x

* **errors** – array of errors as a function of x

* **color** – a fill color

* **nbands** – number of bands to plot. If errors are 1 sigma, using nbands=2 will plot 1 and 2 sigma.

* **alphas** – tuple of alpha factors to use for each error band

* **lw** – linewidth for the edges of the bands

* **lw_center** – linewidth for the central mean line (zero or None not to have one, the default)

* **linecolor** – a line color for central line

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate) to add to (defaults to current plot or the first/main plot if none)
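
To make the inputs to `add_bands` concrete, the NumPy-only sketch below builds `x`, `y` and `errors` arrays and computes the band edges they imply. It assumes, as the `nbands` description suggests, that band k spans y ± k·errors; the data values are made up for illustration.

```python
import numpy as np

x = np.linspace(0.0, 1.0, 5)
y = 2.0 * x                    # central values as a function of x
errors = np.full_like(x, 0.1)  # 1 sigma errors as a function of x
nbands = 2

# Assumed band edges: band k spans y - k*errors to y + k*errors
bands = [(y - k * errors, y + k * errors) for k in range(1, nbands + 1)]
```

With a plotter instance `g`, the same arrays would be passed as `g.add_bands(x, y, errors, nbands=2)`.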

#### add_colorbar(param, orientation='vertical', mappable=None, ax=None, colorbar_args: [Mapping](https://docs.python.org/3/library/collections.abc.html#collections.abc.Mapping) = mappingproxy({}), \*\*ax_args)

Adds a color bar to the given plot.

* **Parameters:**

* **param** – a [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) with label for the parameter the color bar is describing

* **orientation** – The orientation of the color bar (default: ‘vertical’)

* **mappable** – the thing to color, defaults to current scatter

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance to add to (defaults to current plot)

* **colorbar_args** – optional arguments for [`colorbar()`](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.colorbar.html#matplotlib.pyplot.colorbar)

* **ax_args** –

extra arguments:

- **color_label_in_axes**: if True, label is not added (insert as text label in plot instead)

* **Returns:**

The new [`Colorbar`](https://matplotlib.org/stable/api/colorbar_api.html#matplotlib.colorbar.Colorbar) instance

#### add_colorbar_label(cb, param, label_rotation=None)

Adds a color bar label.

* **Parameters:**

* **cb** – a [`Colorbar`](https://matplotlib.org/stable/api/colorbar_api.html#matplotlib.colorbar.Colorbar) instance

* **param** – a [`ParamInfo`](paramnames.md#getdist.paramnames.ParamInfo) with label for the plotted parameter

* **label_rotation** – If set rotates the label (degrees)

#### add_legend(legend_labels, legend_loc=None, line_offset=0, legend_ncol=None, colored_text=None, figure=False, ax=None, label_order=None, align_right=False, fontsize=None, figure_legend_outside=True, \*\*kwargs)

Add a legend to the axes or figure.

* **Parameters:**

* **legend_labels** – The labels

* **legend_loc** – The legend location, default from settings

* **line_offset** – The offset of plotted lines to label (e.g. 1 to not label first line)

* **legend_ncol** – The number of columns in the legend, defaults to 1

* **colored_text** –

- True: legend labels are colored to match the lines/contours

- False: colored lines/boxes are drawn before black labels

* **figure** – True if legend is for the figure rather than the selected axes

* **ax** – if figure == False, the [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance to use; defaults to current axes.

* **label_order** – -1 to show legend entries in the reverse of the order in which lines were added, or a list giving a specific order of line indices

* **align_right** – True to align legend text at the right

* **fontsize** – The size of the font, default from settings

* **figure_legend_outside** – whether figure legend is outside or inside the subplots box

* **kwargs** – optional extra arguments for legend function

* **Returns:**

a [`matplotlib.legend.Legend`](https://matplotlib.org/stable/api/legend_api.html#matplotlib.legend.Legend) instance

#### add_line(xdata, ydata, zorder=0, color=None, ls=None, ax=None, \*\*kwargs)

Adds a line to the given axes, using [`Line2D`](https://matplotlib.org/stable/api/_as_gen/matplotlib.lines.Line2D.html#matplotlib.lines.Line2D)

* **Parameters:**

* **xdata** – a pair of x coordinates

* **ydata** – a pair of y coordinates

* **zorder** – Z-order for Line2D

* **color** – The color of the line, uses settings.axis_marker_color by default

* **ls** – The line style to be used, uses settings.axis_marker_ls by default

* **ax** – optional [`Axes`](https://matplotlib.org/stable/api/_as_gen/matplotlib.axes.Axes.html#matplotlib.axes.Axes) instance (or y,x subplot coordinate) to add to (defaults to current plot or the first/main plot if none)

* **kwargs** – Additional arguments for [`Line2D`](https://matplotlib.org/stable/api/_as_gen/matplotlib.lines.Line2D.html#matplotlib.lines.Line2D)

#### add_param_markers(param_value_dict: [dict](https://docs.python.org/3/library/stdtypes.html#dict)[[str](https://docs.python.org/3/library/stdtypes.html#str), [Iterable](https://docs.python.org/3/library/collections.abc.html#collections.abc.Iterable)[[float](https://docs.python.org/3/library/functions.html#float)] | [float](https://docs.python.org/3/library/functions.html#float)], \*, color=None, ls=None, lw=None)

Adds vertical and horizontal lines on all subplots marking some parameter values.

* **Parameters:**

* **param_value_dict** – dictionary of parameter names and values to mark (number or list)

* **color** – optional color of the marker

* **ls** – optional line style of the marker

* **lw** – optional line width.